Modern smartphones and laptops, for all their shine, rely on foundational technologies developed long before the invention of the World Wide Web. In these notes we look back at how computer architecture developed over time.

Vacuum tubes (1943-1955)

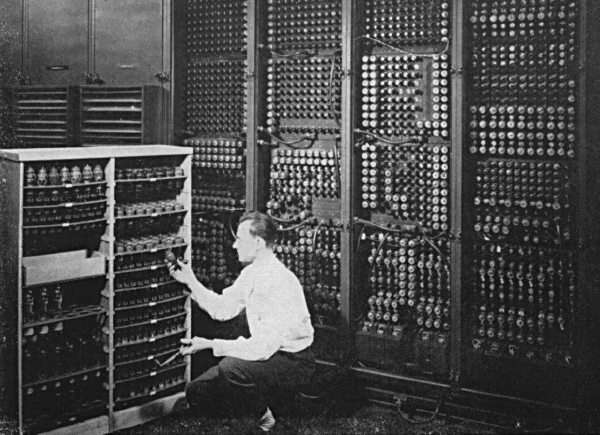

Replacing a broken vacuum tube in ENIAC (1945)

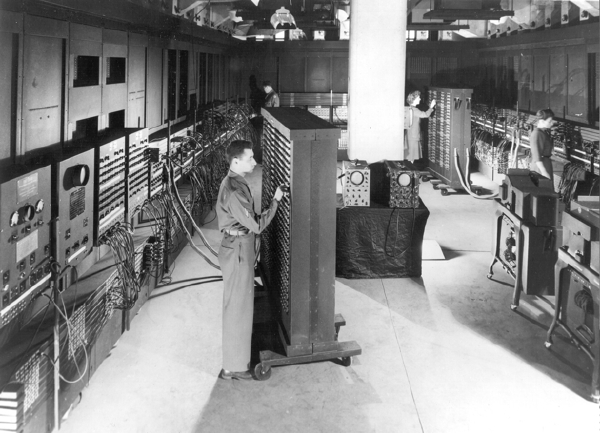

“Programming” ENIAC (1945) by setting function table switches

The basic building block of all computers is the electronic switch: a device that starts or stops the flow of electricity based on a control signal. (It works like a light switch on the wall, except that it is flipped by a separate electrical signal rather than by hand.) In the first generation of general-purpose computers, each electronic switch is a physical vacuum tube.

Vacuum tubes take up a lot of space and are inefficient: they need a lot of power and lose much of it as heat, which occasionally burns out a tube.

The computers of this era are programmed using physical modifications to the machine: by changing the wiring and adjusting arrays of switches. Changing the physical configuration of ENIAC for each new task takes around 2 weeks. There are no “operating systems”.

Hardware technology

- Electronic switch: vacuum tube

- Memory: none

Programming languages

🚫 Not yet. Computers are programmed using switches and plugboard wiring.

Operating systems

🚫 Not yet.

Mainframes, transistors, and random-access memory (1955-1965)

The invention of the transistor changes the game of computer hardware. Like the vacuum tube, a transistor is an electronic switch, but it does the “switching” using semiconductors: materials that conduct or resist electricity depending on whether an electric field is applied to them (as if nature intended them to serve as electronic switches). Thanks to this property of semiconductors, transistors can be much smaller and more efficient than vacuum tubes.

The first generation of transistor-based computers are used for batch processing – there are no interactive terminals available yet, so the only way to interact with the machine is to load a task (for example, by inserting punch cards into a reader) and wait for the results to arrive.

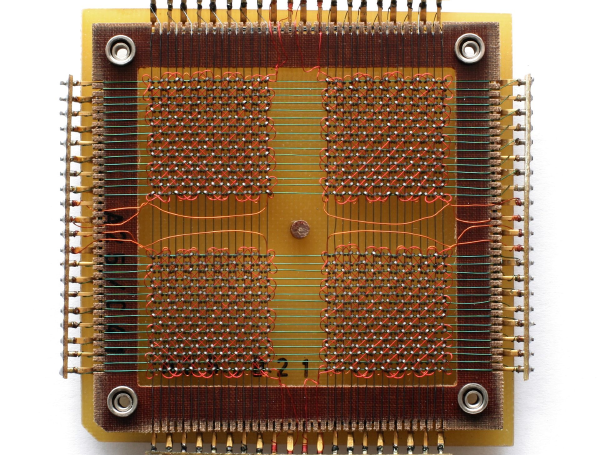

Thanks to the invention of random-access memory, computers can now store programs and data in a magnetic core array, rather than only being able to execute programs as they are read. This leads to the development of the first “operating systems”, i.e. programs loaded before the actual user program, intended to facilitate the operation of the machine.

This, in turn, makes it possible to develop the first high-level programming languages. In 1957 IBM introduces the programming language Fortran, and its IBM 709 computer, announced the same year, comes with the Fortran Monitor System, an early “operating system”-type program whose main purpose was compiling and running Fortran programs.

(The 709 was still a vacuum tube-based computer, but Fortran was soon adopted on the newer transistor-based computers as well.)

Hardware technology

- Electronic switch: transistor

- Memory: magnetic core

Programming languages

- Fortran (1957)

- COBOL (1959)

Operating systems

- FMS (1957)

- IBSYS (1960)

Minicomputers, integrated circuits, terminals, Unix (1965-1980)

A few related innovations transform computers over the next 15 years, and lay technological foundations that still stand firm today.

On the hardware level, the key invention is the integrated circuit: rather than building computers from individual transistors, circuits of transistors are now manufactured as one integrated unit (chip), improving performance and reducing costs.

Improved performance highlights the limitations of batch processing: when the computer processes tasks sequentially, only one user at a time can benefit from it. This makes the debugging cycle slow: if your program crashes, it may take hours or days before it’s your turn to try again. This leads to the invention of multiprogramming: a model in which multiple programs can run at the same time, with the operating system in charge of allocating chunks of processing time to each program.

Multiprogramming, in turn, enabled the advent of terminals – now that the computer is able to run multiple programs “at the same time” (in fact running only one program at a time, but frequently switching between programs), it is now possible to have the computer work on something useful while the user is thinking about what to type into the terminal.
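To make the idea of “frequently switching between programs” concrete, here is a minimal Python sketch of round-robin scheduling, one simple policy for sharing a single processor. It is an illustration under loud assumptions, not how any historical operating system was implemented: the names `program` and `run_round_robin` are hypothetical, each “program” is modelled as a Python generator, and every `yield` stands for one slice of processing time after which the scheduler moves on to the next program in the queue.

```python
from collections import deque


def program(name, steps):
    """Hypothetical program that needs `steps` time slices of processor work.

    Each yield models one time slice; this is an illustrative assumption,
    not a model of any real historical system.
    """
    for i in range(1, steps + 1):
        yield f"{name} step {i}"


def run_round_robin(programs):
    """Run programs one time slice at a time, switching after every slice.

    Only one program runs at any moment; the illusion of running
    "at the same time" comes from rotating through the ready queue.
    """
    ready = deque(programs)  # programs waiting for their next time slice
    trace = []               # record of which program ran in each slice
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # give the program one slice
        except StopIteration:
            continue  # the program has finished: drop it from the queue
        ready.append(current)  # unfinished: back to the end of the queue
    return trace
```

With two hypothetical programs needing 2 and 3 slices, `run_round_robin([program("A", 2), program("B", 3)])` returns `["A step 1", "B step 1", "A step 2", "B step 2", "B step 3"]`: the processor alternates between A and B until A finishes, then B runs alone. Real schedulers switch on timer interrupts and I/O waits rather than voluntary yields, which this sketch deliberately leaves out.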

On the operating systems side, the most lasting development of this era is the invention of UNIX. In the landmark paper introducing UNIX, two of its creators, Dennis Ritchie and Ken Thompson, pitched the new system as cost-effective and easy to use.

Although UNIX builds on earlier work on multiprogramming systems, it becomes the first multiprogramming operating system to be widely adopted, first in academia and then in the business world.

Hardware technology

Integrated circuits.

Operating systems

- UNIX (1969)

Personal computers (1980+)

IBM PC, an early commercially successful personal computer

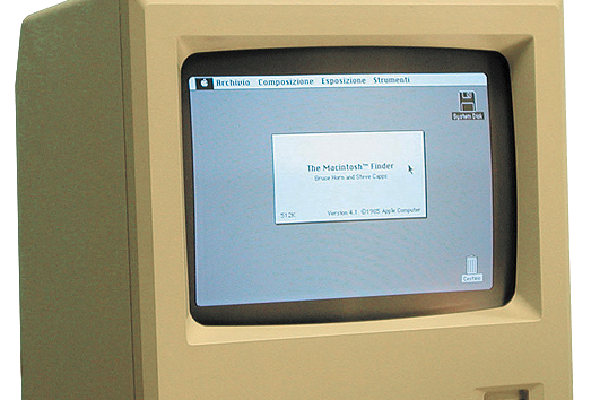

Apple Macintosh, first commercially successful graphical interface computer

Even though the multiprogramming of the minicomputer era allowed more people to share access to increasingly powerful machines, wider adoption of computers remained restricted by hardware costs. A typical computer user in the 70s is an employee of the computing department of a big company, or an electrical engineering student at a top university. This changes during the 80s, when the price of a functional general-purpose computer falls below 2000 dollars for the first time, and eventually to a few hundred dollars, putting such devices within reach of hobbyists and entrepreneurs.

The era of personal computing starts with the IBM PC (1981). IBM, an established provider of large commercial computer systems, develops a computer intended for the general public and, for the first time, sells it through retail shops rather than only through its own sales force. The IBM PC comes with the MS-DOS operating system, famously licensed to IBM by Bill Gates, whose company Microsoft had bought it for the occasion from a small computer manufacturer in the Seattle area. The IBM PC is a massive success, with deliveries reaching 40 000 PCs a month.

MS-DOS is able to run graphical software, but the default interface it presents to the user is a text-based command line. Apple recognizes an opportunity to make computers friendlier to casual users and introduces the Apple Macintosh (1984), the first commercially successful computer featuring a graphical user interface (GUI) with windows and icons. Microsoft responds with Windows (1985), and by the 90s the GUI is the norm in personal computing.

Meanwhile, Unix remains popular in both academia and commercial applications. However, it splinters into a family of related but not-quite-compatible versions maintained by different entities, the two major ones being the University of California, Berkeley, and AT&T.

In the early 90s Linus Torvalds develops Linux, a free implementation of a Unix-like operating system, which quickly builds up a critical mass of adoption and within a decade becomes the dominant Unix-like operating system. Today, the legacy of Unix is as strong as ever: Linux is widely used on servers in datacenters around the world, and Unix-like operating systems such as Android, iOS, ChromeOS and Mac OS X power billions of personal computing devices.

Hardware technology

Integrated circuits (increasingly smaller and more powerful).

Operating systems

- MS-DOS (1981)

- Mac OS (1984)

- Linux (1991)

- Windows 95 (1995)

Conclusion

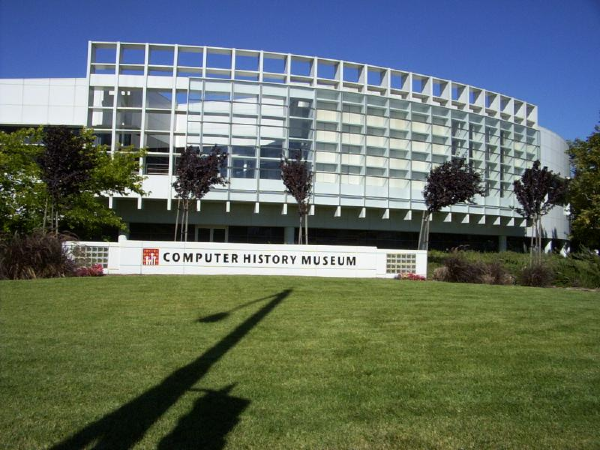

Computer History Museum in Mountain View, California

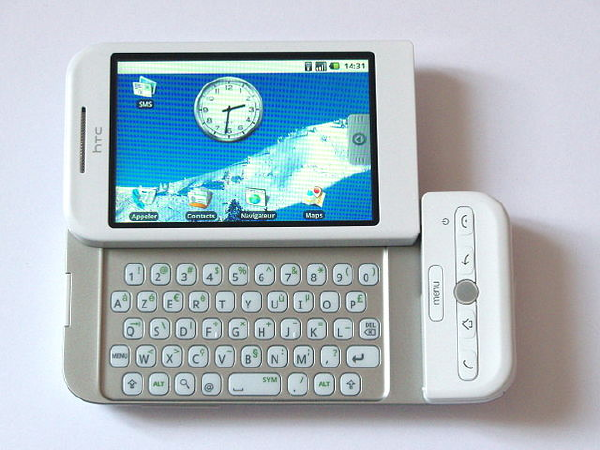

HTC Dream, an early Android smartphone (2008)

For all its fancy shine, every smartphone is a computer history museum, built on foundational technologies developed over the decades:

- electronic switches developed in the 40s (originally using vacuum tubes, later replaced by transistors that we use to this day)

- multiprogramming (the software paradigm that allows the device to run multiple apps at the same time), developed in the 60s

- the Unix family of operating systems (both Android and iOS belong to it: iOS through BSD, Android through Linux), developed in the late 60s and early 70s

- graphical user interface – first successfully commercialized in the 80s

- the web, invented around 1990

- capacitive touchscreens, first commercialized in the 2000s

All of these come together to give us the seamless experience of modern-day personal computing 💫.