In this note we discuss how to configure a Django application running on App Engine Standard so that media (user-uploaded) files are stored in Google Cloud Storage. (If you’re just getting started with Django on App Engine, see also the general introduction here.)

Django files and App Engine Standard

There are two types of files that any Django app needs to reason about: those known ahead of time (static files) and those not known before deployment (user-uploaded files, sometimes called “media files” because in Django they are configured using the settings MEDIA_ROOT and MEDIA_URL). Here we look specifically at how to handle the user-uploaded files.

On App Engine Standard, the application can’t write to the local filesystem, apart from /tmp, which is held in the instance’s memory and is neither shared between instances nor preserved across restarts. Static files are served directly from the application image, while user-uploaded files need to be handled… elsewhere. Within GCP, Google Cloud Storage is the canonical solution for durable file storage, and below we explain how to use it as a backend for the user-uploaded files in our application.

Google Cloud Storage and bucket creation

Google Cloud Storage stores files in buckets. We can have one or more buckets per project – so in particular, we can create a dedicated bucket for user-uploaded files (regardless of whether we already have any buckets for other purposes or not).

To create the bucket, we go to Storage in the Cloud Console and click “Create bucket”.

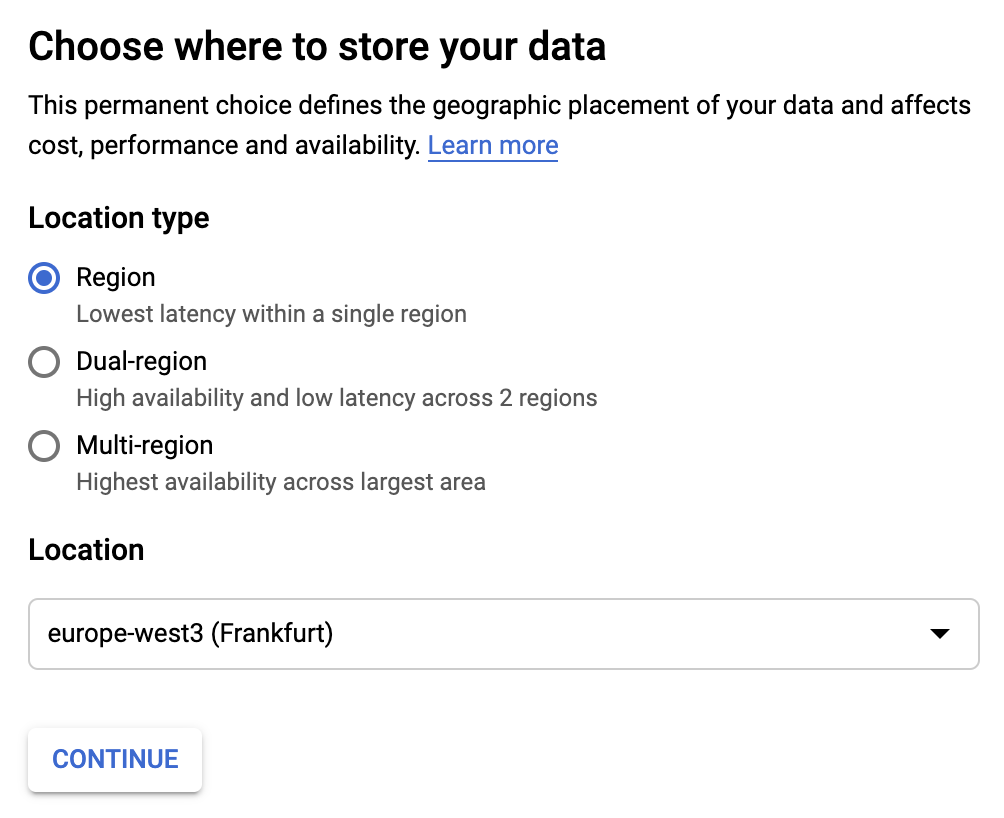

During the bucket creation flow, we get to pick:

- the name for the bucket – needs to be globally unique across all GCP projects

- the physical location in which the bucket content is going to be stored. The location can be regional (associated with a particular geographic location) or multi-region (spanning a large geographic area such as Europe or the United States). To be clear, whatever we choose, the files are going to be available to users globally – but putting them close to where most of the users are will improve serving latency (more information on that in the official docs).

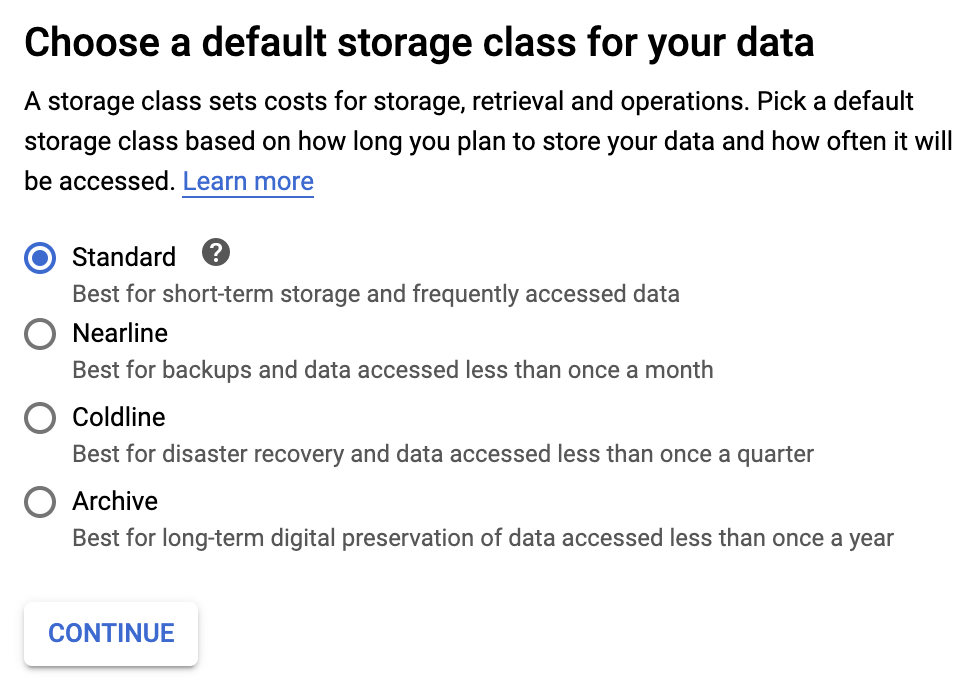

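- the storage class, which determines the price of storing the files and of accessing them (the classes differ mainly in cost rather than in retrieval latency; Standard suits files that are read often)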

- the access control policy, either:
  - fine-grained, meaning that each file has its own set of permissions (an ACL) associated with it, or
  - uniform, meaning that all files in the bucket are governed by the bucket-level permissions

In principle, the uniform access policy seems appealing. However, I found that with django-storages it’s easier to set up credentials if fine-grained access control is set; see the section below for why that is.
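Besides being globally unique, the bucket name has to follow Cloud Storage’s naming rules, so it can help to sanity-check a candidate before going through the creation flow. The sketch below is a hypothetical helper (check_bucket_name is not part of any library) covering only a subset of the documented rules as I understand them: 3 to 63 characters (up to 222 if the name contains dots, with each dot-separated part at most 63), only lowercase letters, digits, dashes, underscores and dots, starting and ending with a letter or digit, not shaped like an IP address, and no “goog” prefix or “google” substring. Uniqueness itself can only be confirmed by actually creating the bucket.

```python
import re
from typing import List

# Hypothetical helper: checks a subset of the documented Cloud Storage
# bucket naming rules. Global uniqueness cannot be checked locally.
_ALLOWED = re.compile(r"[a-z0-9][a-z0-9._-]*[a-z0-9]")
_IPV4_LIKE = re.compile(r"[0-9]{1,3}(\.[0-9]{1,3}){3}")


def check_bucket_name(name: str) -> List[str]:
    """Return a list of problems with `name`; an empty list means none were found."""
    problems = []
    # Names containing dots may be longer, but each dot-separated part is capped at 63.
    max_len = 222 if "." in name else 63
    if not 3 <= len(name) <= max_len:
        problems.append(f"must be 3-{max_len} characters long")
    if any(len(part) > 63 for part in name.split(".")):
        problems.append("each dot-separated part must be at most 63 characters")
    if not _ALLOWED.fullmatch(name):
        problems.append(
            "only a-z, 0-9, '-', '_' and '.', starting and ending with a letter or digit"
        )
    if _IPV4_LIKE.fullmatch(name):
        problems.append("must not look like an IP address")
    if name.startswith("goog") or "google" in name:
        problems.append("must not start with 'goog' or contain 'google'")
    return problems


print(check_bucket_name("my-app-user-uploads"))  # []
```

Anything the helper reports is worth fixing before trying the name in the Cloud Console; an empty result still doesn’t guarantee that the name is available.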

[optional] Import existing files

If we’re setting this up for an existing app, we will likely want to migrate the existing files to the new bucket. From a directory storing those files, we can upload them using gsutil:

```shell
gsutil -m cp -a public-read -r <directory> gs://<bucket name>
```

Note that -a public-read makes the files immediately world-readable to anyone who has the right URL.
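Django stores each FileField value as a name relative to MEDIA_ROOT, and the storage backend looks the file up in the bucket under that same name (or under a prefix, if one is configured). So after the copy it’s worth checking that the object names in the bucket match what the app expects; depending on how the path is passed to gsutil, the directory’s own name may end up as an extra leading folder. The hypothetical helper below (planned_object_names is not part of gsutil or django-storages) lists the expected object names for a local media directory, which can then be compared with the output of gsutil ls -r gs://<bucket name>.

```python
from pathlib import Path
from typing import Iterator, Tuple


def planned_object_names(directory: str, prefix: str = "") -> Iterator[Tuple[str, str]]:
    """Yield (local path, expected object name) for every file under `directory`.

    Object names are '/'-separated paths relative to `directory`, optionally
    placed under `prefix` (leading/trailing slashes in `prefix` are ignored).
    """
    root = Path(directory)
    prefix = prefix.strip("/")
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue  # only files are uploaded as objects
        relative = path.relative_to(root).as_posix()
        yield str(path), (f"{prefix}/{relative}" if prefix else relative)
```

For example, iterating over planned_object_names("site_media") and printing the second element of each pair gives the list to diff against the bucket listing.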

django-storages and application settings

django-storages implements Django integration for multiple cloud storage backends, including Google Cloud Storage, and we can use it to enable storing user-uploaded files in the bucket we just created.

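```shell
pip install "django-storages[google]"
```

The [google] extra also installs the google-cloud-storage client library that the Cloud Storage backend relies on. On App Engine, the dependency also needs to be listed in requirements.txt (e.g. as django-storages[google]) so that it is installed on deployment.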

After adding the dependency, we can edit settings.py:

```python
import os

if os.getenv('GAE_APPLICATION'):
    # Running on App Engine: store user-uploaded files in the Cloud Storage bucket.
    DEFAULT_FILE_STORAGE = 'storages.backends.gcloud.GoogleCloudStorage'
    GS_BUCKET_NAME = '<bucket name>'
    GS_DEFAULT_ACL = 'publicRead'
    MEDIA_URL = 'https://storage.googleapis.com/<bucket name>/'
else:
    # Local development: keep user-uploaded files on disk under site_media/.
    PROJECT_PATH = os.path.join(os.path.abspath(os.path.split(__file__)[0]), '..')
    MEDIA_ROOT = os.path.join(PROJECT_PATH, 'site_media')
    MEDIA_URL = '/site_media/'
```

In the snippet above, the GAE_APPLICATION environment variable is only set when running on App Engine, so we configure a different setup when running locally for development purposes, where the development server manages the user-uploaded files locally under site_media.
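On Django 4.2 and later, DEFAULT_FILE_STORAGE is deprecated in favor of the STORAGES setting (and it was removed in Django 5.1). Below is a minimal sketch of the App Engine branch in that style, assuming a django-storages release that accepts backend options under OPTIONS, with lowercase option names such as bucket_name and default_acl (recent releases document this). The variable media_bucket is a hypothetical local name; the local-development branch can stay as it is.

```python
import os

# Lowercase, so Django does not pick it up as a setting; placeholder as above.
media_bucket = "<bucket name>"

if os.getenv("GAE_APPLICATION"):
    STORAGES = {
        # User-uploaded (media) files: stored in Cloud Storage via django-storages.
        "default": {
            "BACKEND": "storages.backends.gcloud.GoogleCloudStorage",
            "OPTIONS": {
                "bucket_name": media_bucket,
                "default_acl": "publicRead",
            },
        },
        # Setting STORAGES replaces Django's default dict entirely, so the
        # static files backend has to be listed again explicitly.
        "staticfiles": {
            "BACKEND": "django.contrib.staticfiles.storage.StaticFilesStorage",
        },
    }
    MEDIA_URL = f"https://storage.googleapis.com/{media_bucket}/"
```

If the project already customizes STATICFILES_STORAGE, that backend belongs in the staticfiles entry instead.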

[optional] Bucket prefix

If, rather than storing the files directly in the top-level folder of the bucket, we keep all of them under <optional prefix> (without leading or trailing slashes), we just need to make two changes:

- set MEDIA_URL to https://storage.googleapis.com/<bucket name>/<optional prefix>/
- add GS_LOCATION = '<optional prefix>'
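Both values derive from the bucket name and the prefix, so computing them in one place keeps them in sync. A minimal sketch, where gcs_media_settings is a hypothetical helper that assumes the public URL layout https://storage.googleapis.com/<bucket name>/<object name> used above:

```python
def gcs_media_settings(bucket_name: str, prefix: str = "") -> dict:
    """Return GS_BUCKET_NAME, MEDIA_URL and, if a prefix is given, GS_LOCATION.

    Leading/trailing slashes in `prefix` are stripped, so 'uploads',
    '/uploads/' and 'uploads/' all produce the same settings.
    """
    prefix = prefix.strip("/")
    media_url = f"https://storage.googleapis.com/{bucket_name}/"
    values = {"GS_BUCKET_NAME": bucket_name, "MEDIA_URL": media_url}
    if prefix:
        values["MEDIA_URL"] = f"{media_url}{prefix}/"
        values["GS_LOCATION"] = prefix
    return values


# In settings.py, inside the App Engine branch (module-level globals() is the
# settings module's namespace):
# globals().update(gcs_media_settings("<bucket name>", "<optional prefix>"))
```

Plain assignments from the returned dict work just as well if globals().update feels too implicit for a settings file.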

Bucket permissions and access credentials

In the case of my app, the user-uploaded files are meant to be publicly visible, so I wanted all files to be writable only by the app and readable by anyone on the Internet.

One of the benefits of hosting our app on App Engine and using Google Cloud Storage for user-uploaded files is that, when running on App Engine, the app already has Google Cloud credentials set up (those of the App Engine default service account), which allow it to authenticate requests to Google Cloud Storage. So we should not need to follow the authentication steps in the django-storages documentation that describe creating a service account and pointing GOOGLE_APPLICATION_CREDENTIALS at its key.

In practice, I found that this is indeed the case, but only if the bucket access control is set to fine-grained and we set GS_DEFAULT_ACL = 'publicRead'. Here’s what’s going on.

If we set the bucket access control to uniform and GS_DEFAULT_ACL = 'publicRead', the django-storages request to upload a file fails with a 4xx error indicating that the bucket has uniform access control, so an ACL can’t be set on the uploaded object.

Then we’d think that leaving GS_DEFAULT_ACL = None would work, but it doesn’t, for a different reason:

```text
  File "/env/lib/python3.7/site-packages/google/cloud/storage/_signing.py", line 55, in ensure_signed_credentials
    "details.".format(type(credentials), SERVICE_ACCOUNT_URL)
AttributeError: you need a private key to sign credentials.the credentials you are currently using <class 'google.auth.compute_engine.credentials.Credentials'>
```

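Without the ACL set to public, django-storages assumes the files are private, so when building a file’s URL it tries to generate a signed URL. Signing requires a service account private key, and the credentials available on App Engine (the google.auth.compute_engine Credentials in the traceback) only carry an access token, so the signing step fails.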

Fortunately, the workaround is easy enough: set GS_DEFAULT_ACL = 'publicRead' and make sure the bucket has its access control set to “fine-grained”.
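The behavior described above comes down to how the backend builds a file’s URL. The sketch below is a simplified model of that decision, not django-storages’ actual code: file_url and SignedURLRequired are hypothetical names, and custom endpoints, per-file parameters and URL expiry are ignored. With GS_DEFAULT_ACL = 'publicRead' it returns a plain public URL that needs no credentials; otherwise it stops where the real backend would ask the client library for a signed URL, which is the step that fails on App Engine as shown in the traceback. The querystring_auth parameter models the GS_QUERYSTRING_AUTH setting that recent django-storages releases document: setting it to False skips signing too, and as far as I know that (together with a bucket-level IAM grant making the objects publicly readable) is the route the django-storages documentation suggests for uniform buckets, so check the docs for the version you use.

```python
from typing import Optional
from urllib.parse import quote


class SignedURLRequired(Exception):
    """Marks the point where the real backend would need a private key to sign a URL."""


def file_url(bucket_name: str, name: str,
             default_acl: Optional[str] = None,
             querystring_auth: bool = True) -> str:
    """Simplified model of the public-vs-signed URL decision (not library code)."""
    if default_acl == "publicRead" or not querystring_auth:
        # Publicly readable object: a plain URL works, no signing involved.
        return f"https://storage.googleapis.com/{bucket_name}/{quote(name)}"
    # Private object: the real backend would generate a signed URL here, which
    # needs a service account private key that App Engine's credentials lack.
    raise SignedURLRequired(name)


print(file_url("my-bucket", "avatars/me.png", default_acl="publicRead"))
# https://storage.googleapis.com/my-bucket/avatars/me.png
```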

Conclusion

And that’s it, user-uploaded files should now “just work”!