In the fastai course, Jeremy Howard suggests using Conda to manage the local installation of PyTorch. The advantages of this approach are that Conda creates a fully hermetic environment and that the resulting installation comes with working GPU support.

Personally, I prefer to use pip to manage packages. The good news is that, as of 2024, a pip-based setup can also be hermetic and have working GPU support! Here’s how to set it up on Apple Silicon Macs 💫.

Python

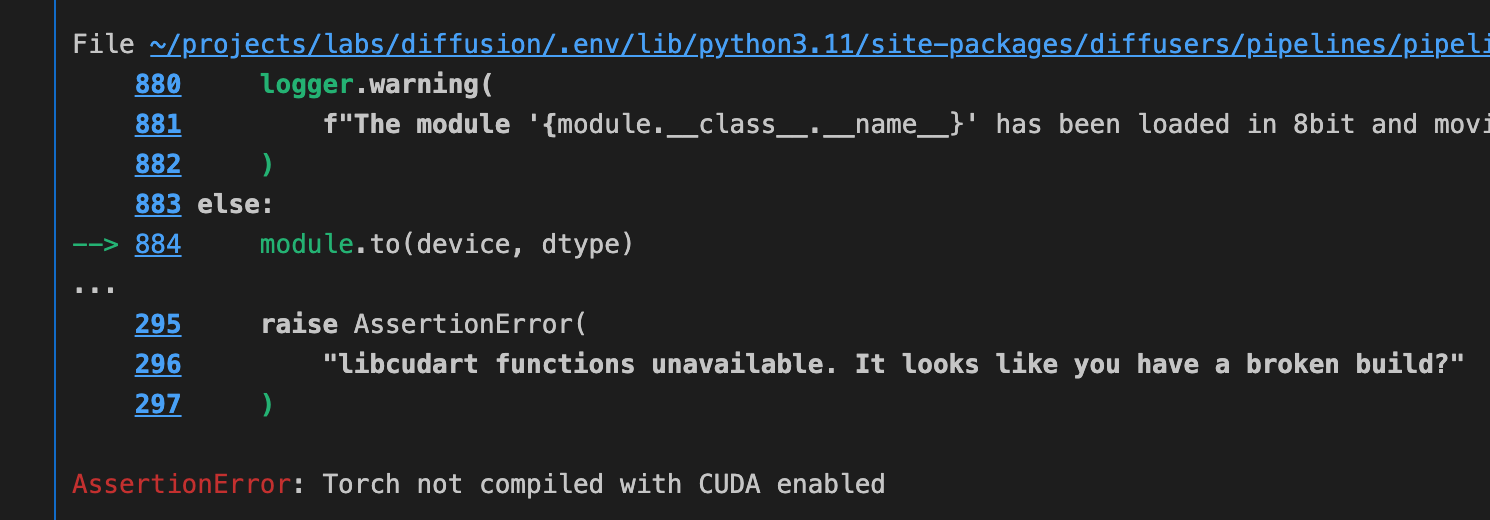

First, we need a Python installation. Let’s get it from the official website. (Don’t use the Python that comes preinstalled with the system: it’s often old, and the operating system uses it for its own internal needs. We don’t want to mess with it.)
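To check which interpreter a shell actually picks up, a few lines of Python are enough. This is a minimal sketch: `looks_like_system_python` is a hypothetical helper, and treating paths under /usr/bin or /System as the system Python is a heuristic assumption on my part, not an official rule.

```python
import sys

# Heuristic (an assumption, not an official rule): Apple's bundled
# interpreters live under /usr/bin or /System, while the python.org
# installer puts its framework build under /Library/Frameworks.
SYSTEM_PREFIXES = ("/usr/bin/", "/System/")


def looks_like_system_python(executable: str = sys.executable) -> bool:
    """Return True if the interpreter path looks like the OS-provided Python."""
    return executable.startswith(SYSTEM_PREFIXES)


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]} at {sys.executable}")
    if looks_like_system_python():
        print("warning: this looks like the system Python; use the python.org one")
```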

How do we pick the right version? This post recommends getting the N-1 version, to avoid potential compatibility issues with libraries that are not yet ready to support the latest release. As of October 2023, the latest is 3.12, so we’d get 3.11.
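The N-1 rule is simple enough to write down. Here is a sketch with a hypothetical `n_minus_one` helper that also compares the suggestion with the interpreter currently running. The hard-coded (3, 12) is the October 2023 latest release mentioned above; update it as new versions ship.

```python
import sys


def n_minus_one(latest: tuple[int, int]) -> tuple[int, int]:
    """Apply the N-1 rule: given the latest (major, minor) release, return the previous minor."""
    major, minor = latest
    if minor == 0:
        # Crossing a major-version boundary is out of scope for this sketch
        raise ValueError("cannot apply the N-1 rule to an X.0 release")
    return (major, minor - 1)


if __name__ == "__main__":
    wanted = n_minus_one((3, 12))  # 3.12 was the latest release as of October 2023
    running = tuple(sys.version_info[:2])
    status = "matches" if running == wanted else "differs from"
    print(f"running {running[0]}.{running[1]}, which {status} the suggested {wanted[0]}.{wanted[1]}")
```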

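After the installation, put something like this in your .bashrc / .zshrc (it makes command-line tools installed with pip install --user available on your PATH), and we’re good for this step:

```bash
export PATH="$PATH":~/Library/Python/3.11/bin
```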

venv

Now let’s create a virtual Python environment.

We want to use a separate hermetic Python environment for each project, so that they don’t interfere with each other. The only package that we will install globally, in the Python environment we just set up, is the package needed to manage virtual environments:

```console
$ python3.11 -m pip install --user virtualenv
```

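Then we can go to the project directory and create a new virtual environment. (Strictly speaking, this command uses the venv module from Python’s standard library, so it works even without the virtualenv package.)

```console
$ python3.11 -m venv .env
```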
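The `python3.11 -m venv .env` command is backed by the standard library’s `venv` module, so the same step can be scripted from Python, for example in a project bootstrap script. A minimal sketch: `make_env` is a hypothetical helper name, and `with_pip=True` mirrors the command-line default of installing pip into the new environment. The returned path assumes the macOS/Linux layout (bin/python).

```python
import venv
from pathlib import Path


def make_env(path: str = ".env", with_pip: bool = True) -> Path:
    """Create a virtual environment at `path`, like `python -m venv path`."""
    env_dir = Path(path)
    venv.create(env_dir, with_pip=with_pip)
    # The environment's own interpreter; activating the env puts its bin/ first on PATH
    return env_dir / "bin" / "python"


if __name__ == "__main__":
    print(f"created environment; interpreter at {make_env()}")
```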

From now on, whenever we run Python code, commands, or tools related to the project, we need to do so in a shell session where we have first activated the environment:

```console
$ source .env/bin/activate
```
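To confirm from within Python that the environment is actually active (handy at the top of a notebook), compare `sys.prefix` with `sys.base_prefix`: inside a venv they differ. A minimal sketch; `in_virtualenv` is a hypothetical helper name.

```python
import sys


def in_virtualenv() -> bool:
    """True when running inside a venv: its prefix differs from the base install's."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    if in_virtualenv():
        print(f"OK: using the project environment at {sys.prefix}")
    else:
        print("warning: no virtual environment active; run `source .env/bin/activate` first")
```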

PyTorch

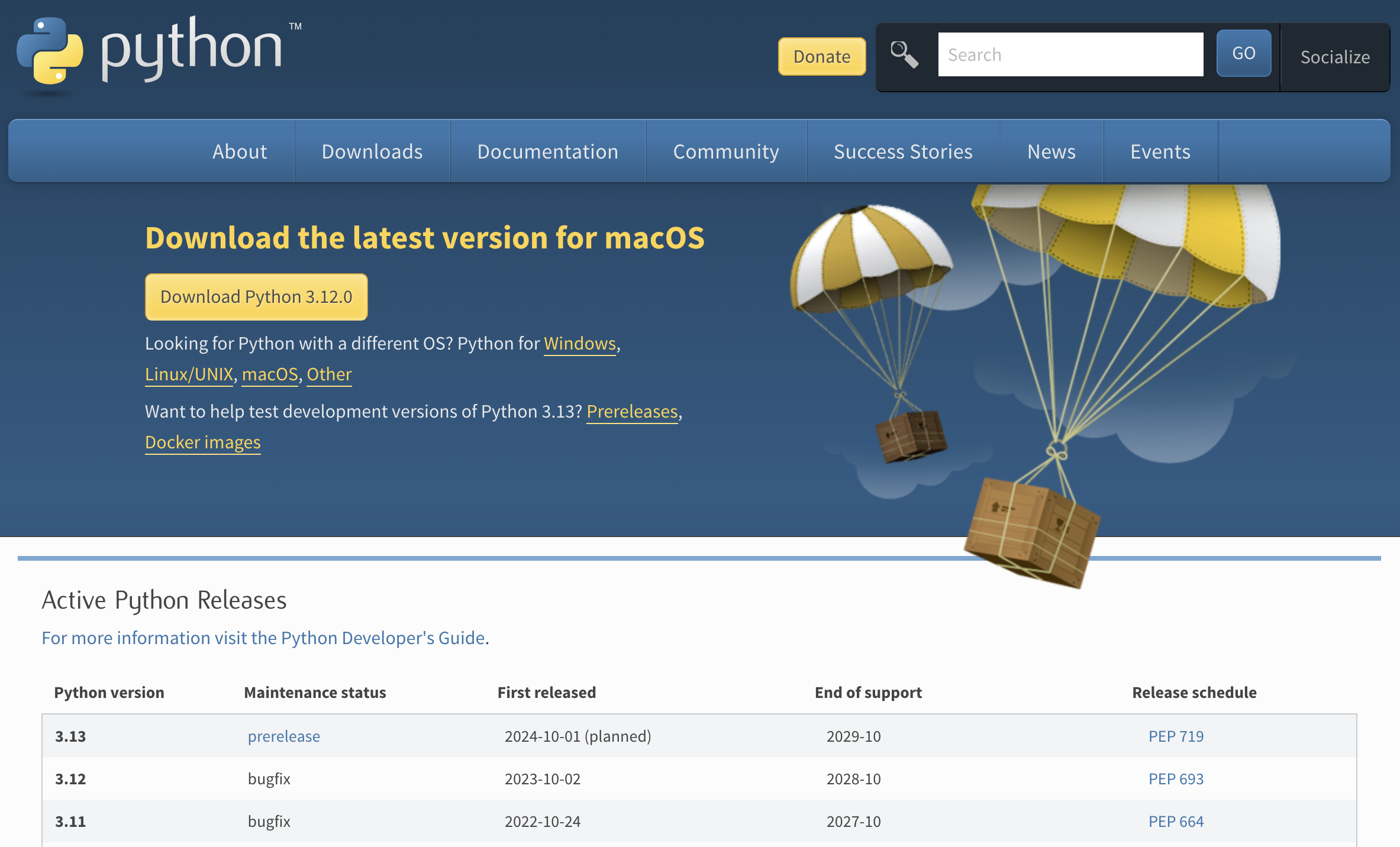

On the PyTorch website, I went with what is now the default configuration. As with other pip commands, make sure to run this within the virtual environment, as noted above:

```console
$ pip3 install torch torchvision torchaudio
```
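To confirm that the packages landed in the active environment, the standard library’s `importlib.metadata` can report installed versions without importing the packages themselves. A sketch with a hypothetical `installed_versions` helper:

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_versions(packages=("torch", "torchvision", "torchaudio")) -> dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    found: dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'NOT INSTALLED (is the venv active?)'}")
```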

MPS / Metal support

MPS (Metal Performance Shaders) is the PyTorch backend that runs on Apple GPUs. We can run this program to check whether MPS support is available:

```python
import torch

# Check that MPS is available
if not torch.backends.mps.is_available():
    if not torch.backends.mps.is_built():
        print("MPS not available because the current PyTorch install was not "
              "built with MPS enabled.")
    else:
        print("MPS not available because the current MacOS version is not 12.3+ "
              "and/or you do not have an MPS-enabled device on this machine.")
else:
    print("all good")
```

Troubleshooting

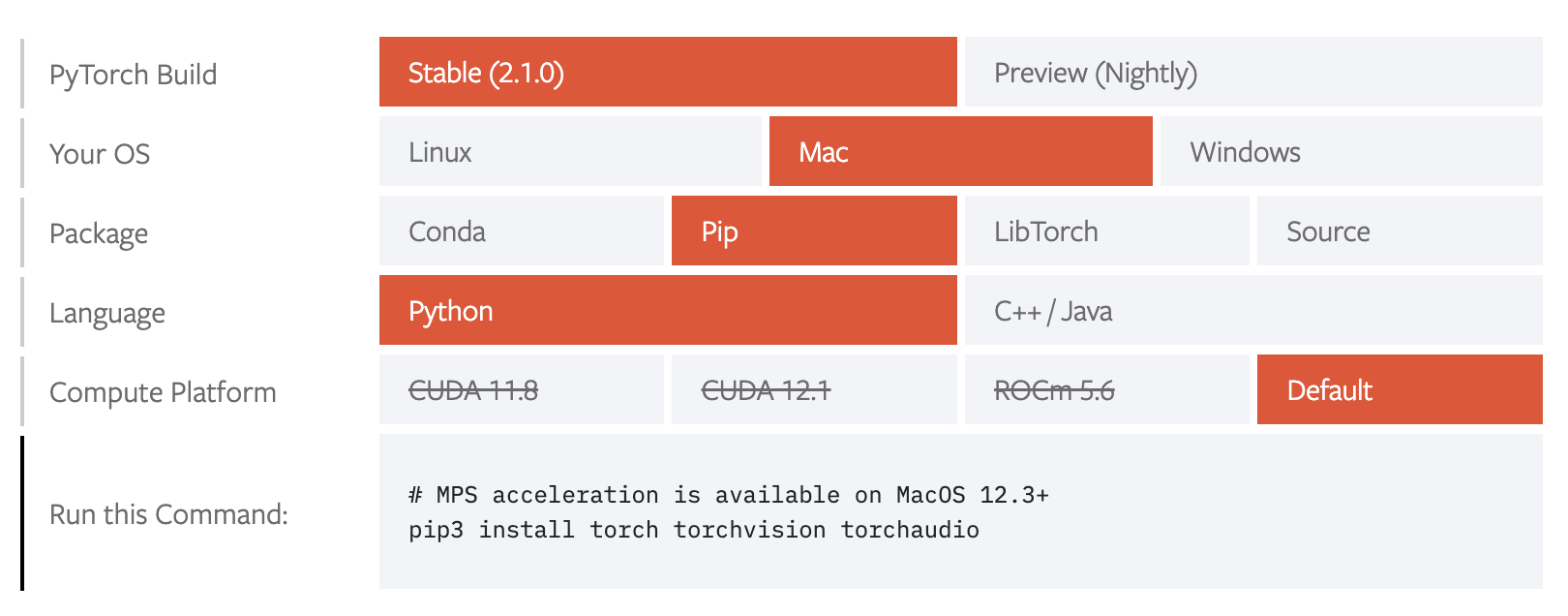

AssertionError: Torch not compiled with CUDA enabled

Whenever we run code that specifies the device a pipeline runs on, we need to make sure it targets the right GPU device. Most examples you’ll find reference cuda, like this one:

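```python
import torch
from diffusers import StableDiffusionImg2ImgPipeline

pipe = StableDiffusionImg2ImgPipeline.from_pretrained(
    "CompVis/stable-diffusion-v1-4",
    variant="fp16",
    torch_dtype=torch.float16,
).to("cuda")  # CUDA is not available on a Mac
```

Just replace cuda with mps everywhere, and it’ll work.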
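Rather than editing the device string by hand in every example, one option is a small helper that picks the best available backend. This is a sketch of my own, not something from the diffusers or PyTorch docs: the name `best_device` is hypothetical, and the try/except only exists so the helper degrades to the CPU when PyTorch isn’t installed.

```python
def best_device() -> str:
    """Return "mps", "cuda", or "cpu", depending on what this machine supports."""
    try:
        import torch
    except ImportError:
        # Without PyTorch there is no GPU backend to query; report the CPU
        return "cpu"
    if torch.backends.mps.is_available():  # Apple GPU via Metal
        return "mps"
    if torch.cuda.is_available():  # NVIDIA GPU
        return "cuda"
    return "cpu"


if __name__ == "__main__":
    print(best_device())
```

With it, a pipeline like the one above would end in .to(best_device()) instead of a hard-coded device name.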

unimplemented _linalg_solve_ex

When trying to run my fastai notebook locally using Jupyter, I hit a gap in PyTorch’s support for Apple Silicon:

```
NotImplementedError: The operator 'aten::_linalg_solve_ex.result' is not currently implemented for the MPS device.
```

This is tracked as PyTorch issue #98222. In the meantime, the workaround is to set the environment variable PYTORCH_ENABLE_MPS_FALLBACK, which enables a CPU fallback for missing functionality.

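You can set the environment variable in your program (as far as I can tell, this has to happen before torch is imported):

```python
import os
os.environ['PYTORCH_ENABLE_MPS_FALLBACK'] = '1'  # set before `import torch`
```

or when starting the Jupyter server:

```console
$ PYTORCH_ENABLE_MPS_FALLBACK=1 python -m jupyter notebook
```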
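As far as I can tell, the variable is read when PyTorch loads, so setting it outside the program, as in the shell command, is the most robust option. If you’d rather launch Jupyter from a Python script, here is a sketch of the equivalent; the helper name `with_mps_fallback` is hypothetical. It copies the current environment and adds the flag for the child process only, leaving the parent’s environment untouched.

```python
import os
import subprocess
import sys
from collections.abc import Mapping
from typing import Optional


def with_mps_fallback(base: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Return a copy of the environment with PyTorch's MPS CPU fallback enabled."""
    env = dict(os.environ if base is None else base)
    env["PYTORCH_ENABLE_MPS_FALLBACK"] = "1"
    return env


if __name__ == "__main__":
    # Same as: PYTORCH_ENABLE_MPS_FALLBACK=1 python -m jupyter notebook
    subprocess.run([sys.executable, "-m", "jupyter", "notebook"], env=with_mps_fallback())
```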

RuntimeError: MPS backend out of memory

I ran into this issue while using the Hugging Face transformers library to fine-tune DeBERTa-v3 for the Kaggle Disaster Tweets challenge. For once, the error message is pretty descriptive and even suggests how to fix the issue:

```
RuntimeError: MPS backend out of memory (MPS allocated: 2.42 GB, other allocations: 15.38 GB, max allowed: 18.13 GB).
Tried to allocate 375.29 MB on private pool.
Use PYTORCH_MPS_HIGH_WATERMARK_RATIO=0.0 to disable upper limit for memory allocations (may cause system failure).
```
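Putting the numbers from the message side by side shows why the allocation failed: what was already allocated plus the new request just exceeds the cap. A quick back-of-the-envelope check; I’m assuming the message uses binary units (1 GB = 1024 MB), though the verdict is the same with 1000.

```python
# Figures copied from the error message above
mps_allocated_gb = 2.42
other_allocations_gb = 15.38
max_allowed_gb = 18.13
requested_mb = 375.29

MB_PER_GB = 1024  # assumption: binary units; 1000 gives the same verdict

total_gb = mps_allocated_gb + other_allocations_gb + requested_mb / MB_PER_GB
print(f"needed {total_gb:.2f} GB vs. cap {max_allowed_gb:.2f} GB")
print("over the limit" if total_gb > max_allowed_gb else "within the limit")
```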

I tried to be smarter and set PYTORCH_MPS_HIGH_WATERMARK_RATIO to a nonzero value that still keeps memory use capped, for example 0.5. However, this:

```python
import os
os.environ['PYTORCH_MPS_HIGH_WATERMARK_RATIO'] = '0.5'
```

results in another error during trainer initialization:

```
RuntimeError: invalid low watermark ratio 1.4
```

I have no idea why setting PYTORCH_MPS_HIGH_WATERMARK_RATIO to 0.5 causes this other internal variable to be set to 1.4 (and why this is not OK). In the end the only way I found to get all of this to work was to follow the instruction from the error message and take the risk of unbounded RAM use.

```python
import os
os.environ['PYTORCH_MPS_HIGH_WATERMARK_RATIO'] = '0.0'
```

But hey, it’s the operating system’s job to prevent one memory-hungry process from taking down the entire system. In the OS we trust.
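For what it’s worth, here is my best guess at what’s going on with the watermark error, written as a toy model rather than PyTorch’s actual code. PyTorch also reads a PYTORCH_MPS_LOW_WATERMARK_RATIO variable, which, as I read its MPS environment variable documentation, defaults to 1.4 on unified-memory machines like Apple Silicon Macs. The sketch assumes the low ratio must lie between 0 and the high ratio, and that a high ratio of 0.0 (limit disabled) is exempt from that comparison. Under those assumptions, 0.5 fails and 0.0 passes, which matches what I saw.

```python
DEFAULT_LOW_WATERMARK_RATIO = 1.4  # assumption: default on unified-memory Macs


def watermark_error(high: float, low: float = DEFAULT_LOW_WATERMARK_RATIO):
    """Return the error this toy model predicts for the given ratios, or None if accepted."""
    if high == 0.0:
        # 0.0 disables the upper limit, so there is nothing to compare against
        return None if low >= 0.0 else f"invalid low watermark ratio {low}"
    if not 0.0 <= low <= high:
        return f"invalid low watermark ratio {low}"
    return None


if __name__ == "__main__":
    # 1.7: assumed default high watermark ratio
    for high in (0.5, 0.0, 1.7):
        print(high, "->", watermark_error(high) or "accepted")
```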

Conclusion

That’s it 💫. This Python setup gives us a hermetic environment for any PyTorch project on a MacBook. It has GPU support, and it doesn’t require Conda. Enjoy, and happy training!